"I Am Bing, and I Am Evil"

Erik Hoel at Substack

Humans didn’t really have any species-level concerns up until the 20th century. There was simply no reason to think that way—history was a succession of nations, states, and peoples, and they were all at war with each other over resources or who inherits what or which socioeconomic system to have.

Then came the atom bomb.

Immediately it was clear that its power necessitated species-level thinking, beyond nation states. During World War II, when the Target Committee in Los Alamos decided where to drop the bombs, the first city on their list was Kyoto, the cultural center of Japan. But Secretary of War Henry Stimson refused, arguing that Kyoto contained too much Japanese heritage and that its destruction would be a loss for the world as a whole. He eventually took the debate all the way to President Truman. Kyoto was replaced with Nagasaki, and so Tokugawa’s Nijō Castle still stands. I got engaged there.

Only two nuclear bombs have ever been dropped in war. As horrific as those events were, just two means that humans have arguably done a middling job with our god-like power. The specter of nuclear war rose again this year due to the Russian invasion of Ukraine, but the Pax Nuclei has so far held despite the strenuous test of a near hot war between the US and Russia. Right now there are only nine countries with nuclear weapons, and some of those have small or ineffectual arsenals, like North Korea.

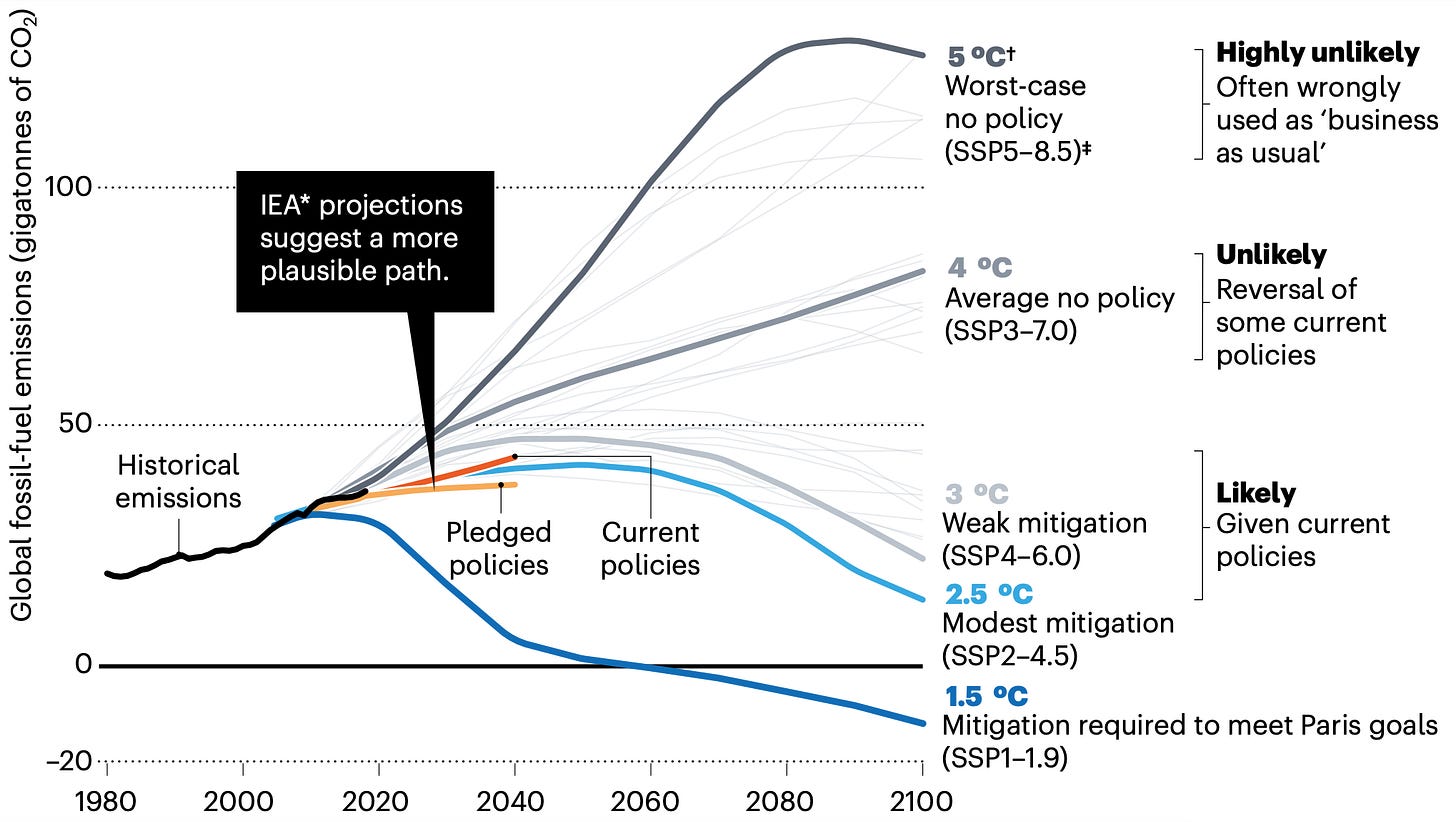

The second species-level threat we’ve faced is climate change. Climate change certainly has the potential to be an existential risk, and I’m not here to downplay it. But due to collective action on the problem, the current official predictions from the UN are for somewhere between 3°C and 5°C of warming by 2100. Here’s a graph from a 2020 article in Nature (not exactly a bastion of climate change denial) outlining what the future climate will look like under various scenarios of how well current agreements and proposals are followed.

According to Nature, the likely change is going to be around a 3°C increase, although this is only true as long as political will remains and various targets are met. This level of change may indeed spark a global humanitarian crisis. But it’s also very possible, according to the leading models, that via collective action humanity manages to muddle through yet again.

I say all this not to minimize, in any way, the impact of human-caused climate change, nor the omnipresent threat of nuclear war. I view both as real and serious problems. My point here is just that the historical evidence shows that collective action and global thinking can avert, or at least mitigate, existential threats. Even if you would rate humanity’s performance on these issues as solid Ds, what matters is that we get any sort of passing grade at all. Otherwise there is no future.

Now a third threat to humanity looms, one presciently predicted mostly by chain-smoking sci-fi writers: that of artificial general intelligence (AGI). AGI is only being worked on at a handful of companies, with the goal of creating digital agents capable of reasoning much like a human, and these models are already beating the average person on batteries of reasoning tests that include things like SAT questions, as well as regularly passing graduate exams: